Google SEO Comprehensive Guide

In 2025, search engine traffic remains one of the key factors in a website's success. As the world's leading search engine, Google is a powerful channel for increasing website visibility and acquiring targeted traffic, which makes mastering its SEO (Search Engine Optimization) strategies essential. This article, "The Underlying Logic and Best Technical Practices of Google SEO Traffic", systematically analyzes how search engines work, helping you understand how they rank content through algorithms and signals. It then covers technical SEO implementation, keyword research, content architecture planning, topic development, content quality optimization, and link building, forming a full-chain optimization strategy from foundational logic to practical execution. The article also summarizes SEO concepts and key points and analyzes common issues and their solutions. Whether you're an SEO beginner or a seasoned optimization expert, it offers actionable strategies and practical tools to steadily increase your website traffic and search visibility.

The article “The Underlying Logic and Best Technical Practices of Google SEO Traffic” provides a well-organized table of contents for readers. As the article is quite lengthy, it is recommended to bookmark it and read it sequentially when time permits or when needed. This reading method is more conducive to forming a systematic understanding of SEO; otherwise, fragmented reading may make it difficult to grasp the logical relationships between each part. Of course, you can also skip the suggestion and click the blue anchor text links in the table of contents to jump directly to the specific sections for targeted study.

- Understanding Google Search Engine

- Technical SEO

- TDU Tags

- Property Tags

- Robots Protocol (robots.txt)

- Sitemap (XML Sitemap)

- SSL Certificate (HTTPS)

- Nofollow and Dofollow

- Canonical Tags

- Index and Noindex Tags

- Schema

- Hreflang Tags

- Alt Text

- Website Structure (Navigation Menus)

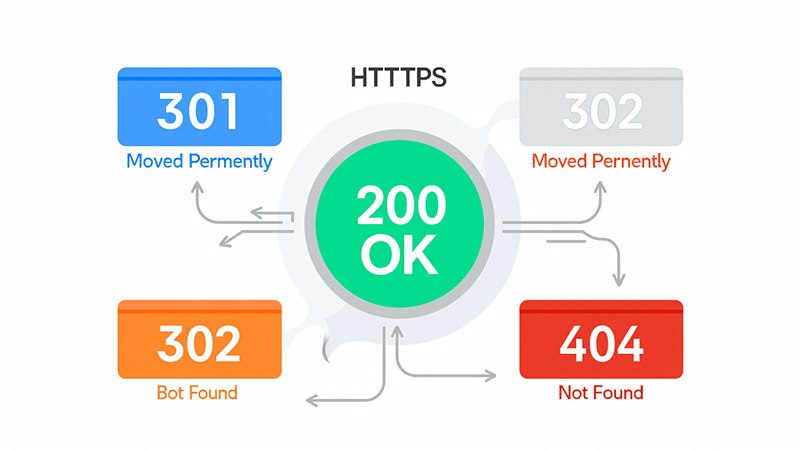

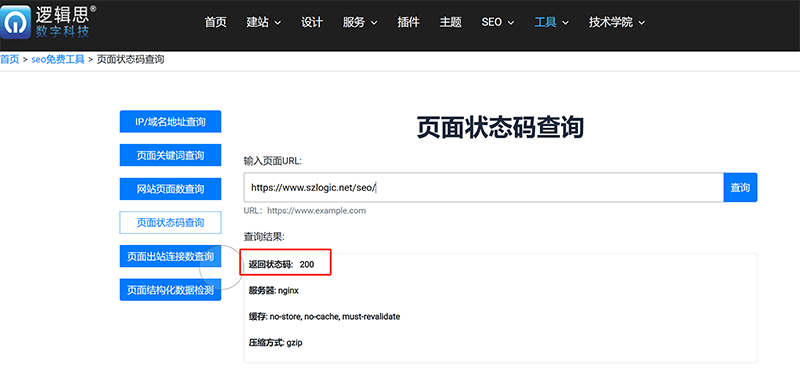

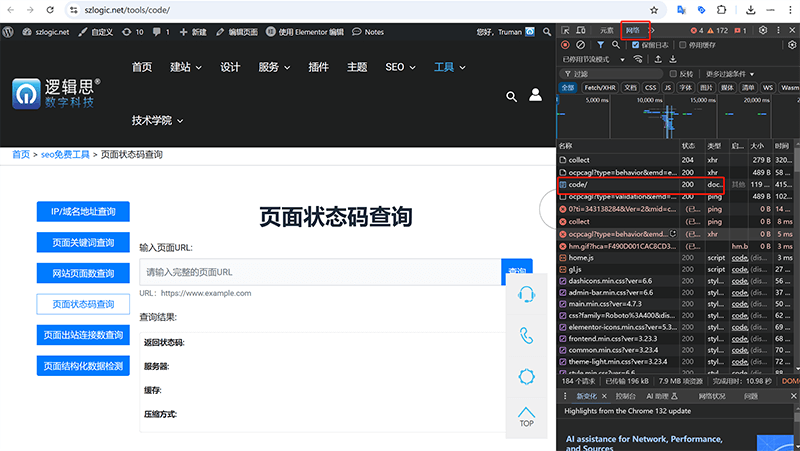

- SEO-Related Status Codes

- Submitting a Site to Search Engines

- Checking the Number of Pages Indexed by Search Engines

- Submitting New Pages or Updated Content

- SEO Keyword Research

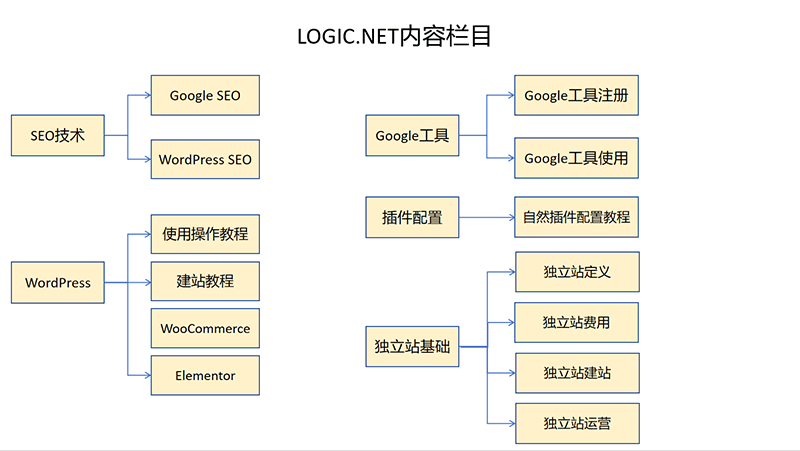

- SEO Content Planning

- SEO Topic Strategy

- SEO Content Quality Evaluation Metrics

- Ranking Signals for Page Improvement

- SEO Link Building

- Reasons for SEO Traffic (Ranking) Drops and Solutions

- Ways to Improve SEO Click-Through Rate (Increase Traffic)

- Optimize Keywords in Titles and Descriptions

- Use Attractive Title and Description Formats

- Use Rich Media (Videos, Images) to Enhance Search Result Displays

- Include Dates or Update Times

- Use Clearer and More Descriptive URLs

- Add Structured Data

- Improve Page Load Speed

- Optimize for Local Search (How to Add Google My Business Info)

Ⅰ、Understanding Google Search Engine

The methodology for effective Google SEO, like anything else, requires careful planning before action. SEO is not simply about keyword stuffing or blindly pursuing the number of backlinks; it is a systematic strategic process. Before beginning the optimization work, it is essential to have a thorough understanding of the principles and operational mechanisms of the Google search engine. The core of Google’s search engine lies in its complex algorithms, which are designed to provide users with the most relevant and valuable content. Understanding these algorithms can help us more accurately pinpoint the direction of optimization and grasp the essence of Google SEO. Success in Google SEO is not accidental; it is built on a deep understanding of the search engine's principles and operational mechanisms. Only by truly understanding how Google’s search engine works and recognizing its patterns can we position ourselves advantageously in the complex and ever-changing search environment, achieving a win-win for both website traffic and business conversions.

Understanding the Google search engine is the first step towards effective SEO. The core mission of a search engine is to provide users with accurate, high-quality answers that meet their search needs. It exists to answer people's questions, not to cater to the optimization strategies of website owners or SEO experts. Whether the query is simple or complex, Google aims to present the most valuable content to users through its algorithms. Compared to SEO experts, Google has a much deeper and more comprehensive understanding of users. It relies on its massive data analysis capabilities to track and analyze global search behavior in real time, gaining insight into users' search intent and preferences. Based on this data, Google continually adjusts and optimizes its algorithms so that each search result best meets users' needs. Therefore, relying solely on technical tricks while neglecting the quality of the content itself is often counterproductive.

The focus of the Google search engine is to provide useful answers, not to passively accept various ranking techniques. While proper SEO techniques can help a website be better recognized and understood by search engines, the real key to ranking lies in the relevance, authority, and user experience of the content. The design of search engine algorithms is intended to reward websites that focus on providing real value to users, rather than those that only emphasize external optimization methods. Additionally, Google has a zero-tolerance policy for deceptive practices and will take strong action against attempts to manipulate search results. This includes techniques like keyword stuffing, hidden text, and malicious linking. Once violations are detected, a website may face penalties such as ranking drops or even complete removal from the search engine. Maintaining transparency, fairness, and focusing on creating high-quality content for users is the correct path to achieving long-term SEO success. Understanding the essence and operational mechanisms of the Google search engine is essential knowledge for every SEO professional and website administrator. Only by truly standing in the users' shoes and creating valuable content can one gain an advantage in the highly competitive search environment.

1、The Evolution of the Google Search Engine

(1) Before 2015: The Era of Keyword Matching

Google's search engine has developed through distinct stages, each reflecting advances in technology and changes in user demand. Before 2015, it operated primarily in the keyword-matching era. During this stage, the core algorithm relied on precisely matching the keywords entered by the user, and website optimization focused on placing keywords strategically on the page to improve rankings. Although this approach was simple and effective, it also led to a proliferation of low-quality content, as many websites tried to manipulate rankings through keyword stuffing while ignoring the actual value of the content.

(2) After 2015: The AI Era of Content Relevance to User Needs

With advancements in technology and the diversification of user demands, after 2015, search engines entered the era of artificial intelligence (AI). In this stage, search engines like Google no longer relied solely on keyword matching but instead placed greater emphasis on the relevance of content to user needs. The introduction of AI technology enabled search engines to understand semantics and analyze context, allowing for a more accurate grasp of user search intent. This shift led to profound changes in SEO optimization strategies, with content quality, user experience, and the actual value of the page becoming key factors affecting rankings. The transition of Google’s search engine from simple keyword matching to AI-based semantic understanding marked its shift from a tool-based platform to an intelligent information service. This era-defining transformation not only improved the precision of search results but also encouraged content creators to focus on providing genuinely valuable information to meet users' increasingly diverse needs.

2、How Google Views External Links (Backlinks)

Google's search algorithm treats external links (backlinks) as a key factor in evaluating the value and credibility of a webpage. When another website links to your page, it is essentially casting a "vote of trust" for your content, signaling to search engines that the page has a certain level of value and reliability. This kind of external endorsement helps search engines determine the authority and influence of your website within a specific topic area. Backlinks are not only a technical optimization method; they also give readers additional context that helps them better understand related topics. High-quality backlinks typically appear on pages with strong content relevance, where they serve as sources of supplementary information and improve the reading experience. Finally, backlinks often function as a form of recommendation. When a website reviews a product or service and links to its page, the link acts as a recommendation to the reader. Such natural recommendation links increase the credibility of the linked page and strengthen the user's trust in the recommended content. Therefore, when evaluating backlinks, Google's algorithm looks not only at quantity but places greater emphasis on the quality, relevance, and authority of the link source.

3、Who Is SEO Content Written For?

Who is SEO content really written for? The answer is quite simple: it is written for both humans and machines. The two are not mutually exclusive but complementary and inseparable. In modern search engine optimization, high-quality content must first attract and engage readers, meet their needs, answer their questions, and provide valuable information. After all, real traffic and conversions come from user approval. Only content that resonates with the audience and sparks interest can prompt readers to stay, click, and even share. However, if content caters solely to users while ignoring the Google search engine's rules, it will struggle to rank well against fierce competition. Google relies on algorithms and its crawler, Googlebot, to understand and index web pages so that users quickly receive the most relevant results when searching. Therefore, SEO content must also consider how machines "read" it, applying proper keyword placement, optimized heading structures, metadata settings, and internal linking strategies to help search engines crawl and understand the core information of a page.

In practice, content creators need to find a balance between the two. They must incorporate keywords and technical optimization elements without compromising the reading experience. One could say that the art of SEO lies in this balance: it is a "dual communication" with humans and algorithms, resonating with people while remaining legible to machines. Only when the content excels in both respects can the ultimate goal of search engine optimization be achieved.

4、How the Google Search Engine Works

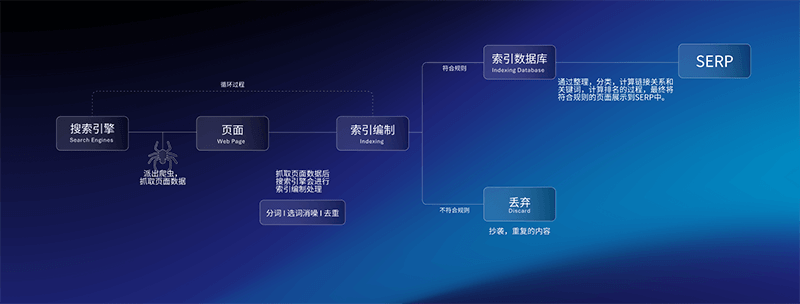

The example image above illustrates how the Google search engine works. This process forms a continuous cycle, where the Google search engine constantly crawls new data and updates its index to ensure the timeliness and accuracy of search results. The operation of the Google search engine can be broken down into the following key steps:

- Search Engine Crawls Page Information: Search engines deploy web crawlers (such as GoogleBot) to gather webpage data. These crawlers automatically traverse the internet, collecting content information from webpages to ensure that the search engine has the most up-to-date data.

- Search Engine Processes Page Information: The data collected by the web crawlers is sent to the search engine for processing. The content of the webpage includes text, images, links, and more, and the crawler attempts to capture as much usable information as possible from the page for further processing.

- Search Engine Indexing: The data collected from the pages enters the indexing stage. This phase includes tokenization, keyword selection, noise reduction, and deduplication, among other processes, so that the search engine can efficiently understand and store the webpage content. The goal of indexing is to structure large amounts of webpage data, making it easier and faster to retrieve.

- Search Engine Index Database: The completed index is stored in the index database. This database organizes and categorizes the data, calculates link relationships, and applies ranking algorithms. Content that meets the criteria is retained, allowing search results to be presented quickly when users search.

- Discard: Content that does not meet indexing rules, such as plagiarized or duplicate webpages, is discarded to prevent it from appearing in search results. This step ensures the quality of search results and prevents low-quality or irrelevant content from interfering with the user experience.

- SERP (Search Engine Results Page): Ultimately, the content that has been indexed and filtered will be displayed on the Search Engine Results Page (SERP). After users enter a keyword, the search engine will match it against data in its index database and display the most relevant results.
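The crawl, index, discard, and retrieve steps above can be sketched with a toy inverted index. This is only an illustration under simplifying assumptions, not a description of Google's actual systems: the page URLs, the stop-word list, and the helper names `tokenize`, `build_index`, and `search` are all hypothetical, and "deduplication" here only drops exact duplicates after normalization.

```python
import re
from collections import defaultdict

# Hypothetical stop-word list standing in for the "noise reduction" step.
STOP_WORDS = {"a", "an", "the", "of", "to", "and", "is", "in", "for"}

def tokenize(text):
    """Lowercase the text, split it into words, and drop stop words."""
    return [w for w in re.findall(r"[a-z0-9]+", text.lower()) if w not in STOP_WORDS]

def build_index(pages):
    """Build an inverted index {term: set of URLs}, discarding duplicate bodies."""
    index = defaultdict(set)
    seen_bodies = set()
    for url, body in pages.items():
        normalized = " ".join(tokenize(body))
        if normalized in seen_bodies:  # duplicate content is discarded, not indexed
            continue
        seen_bodies.add(normalized)
        for term in normalized.split():
            index[term].add(url)
    return index

def search(index, query):
    """Return the URLs containing every query term, sorted alphabetically."""
    terms = tokenize(query)
    if not terms:
        return []
    matches = set.intersection(*(index.get(t, set()) for t in terms))
    return sorted(matches)

# Stand-in for crawled data: URL -> page text.
pages = {
    "https://example.com/seo-guide": "A guide to technical SEO and link building",
    "https://example.com/seo-copy": "A guide to technical SEO and link building",
    "https://example.com/sitemaps": "How an XML sitemap helps crawling",
}
index = build_index(pages)
print(search(index, "technical SEO"))
```

The second page duplicates the first, so it never enters the index and cannot appear in results. A real search engine would also rank the matching pages, which this sketch leaves out.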

5、Google Search Engine Algorithms and Signals

Algorithms and signals are closely related. An algorithm is the set of rules a search engine uses to process and rank web pages, while signals are the data and features used to measure the quality and relevance of a webpage; in other words, signals are the algorithm's input. When a search engine receives a user's query, its algorithms analyze information such as webpage content, link structure, and user behavior. Each of these pieces of information is a signal. The algorithm uses the signals to determine which web pages are most relevant and ranks the search results accordingly. Below are the key algorithms and signals of the Google search engine:

(1) Google Search Engine's Core Algorithms

PageRank Algorithm

PageRank was developed in the late 1990s by Google founders Larry Page and Sergey Brin. It was designed to evaluate the quality and quantity of the links pointing to a page. Along with other factors, this score determines a page's position in search rankings. Google last updated its public PageRank score in 2013, and since then PageRank has remained a hidden algorithm within Google's internal systems. Currently available metrics such as DA, PA, DR, and UR are produced by third-party SEO tools that approximate the former public score. Each tool uses slightly different evaluation parameters, which explains why authority scores vary between tools. The primary functions and operating mechanism of the PageRank algorithm are as follows:

①The main functions of the PageRank algorithm:

- SERP Click-Through Rate: Pages with a higher organic click-through rate in Google search results typically rank higher. This is a user behavior signal considered alongside PageRank rather than part of its link analysis.

- Link Voting: The PageRank algorithm is based on the principle of "link voting," where a webpage votes to support the importance of another page by linking to it.

- Link Quality: Not all links carry the same weight. Links from high-quality, authoritative pages are more valuable than those from low-quality pages.

- Link Quantity: In addition to link quality, the quantity of links is also a factor. More external links generally improve a webpage's ranking in Google search results.

- Internal Links: Internal links are also considered important. While the voting weight of internal links is not as high as that of external links, they still play a role in keyword voting.

②The operating mechanism of PageRank algorithm:

- Link Authority Transfer (Link Juice Transfer): The PageRank algorithm measures the authority and importance of web pages through the transfer of authority, also known as “Link Juice.” When one webpage links to another, it passes part of its own authority to the linked page, helping search engines determine the value of that page.

- Recalculation Cycle: PageRank values are not static; they are recalculated periodically to reflect the latest link relationships.
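Link voting and authority transfer can be illustrated with the simplified textbook form of PageRank, computed by repeated recalculation. This is a minimal sketch, not Google's production system: the three-page link graph is hypothetical, and the damping factor of 0.85 and the even redistribution for pages without outbound links are assumptions taken from the commonly taught formulation.

```python
def pagerank(links, damping=0.85, iterations=50):
    """Simplified PageRank.

    links: {page: [pages it links to]}.
    Returns {page: score}; the scores sum to 1.
    """
    pages = set(links) | {t for targets in links.values() for t in targets}
    n = len(pages)
    rank = {p: 1.0 / n for p in pages}  # start with equal authority
    for _ in range(iterations):  # each pass is one "recalculation cycle"
        new_rank = {p: (1.0 - damping) / n for p in pages}
        for page in pages:
            targets = links.get(page, [])
            if targets:
                # A page splits the authority it passes on among its outbound links.
                share = damping * rank[page] / len(targets)
                for t in targets:
                    new_rank[t] += share
            else:
                # A page with no outbound links spreads its authority evenly.
                for p in pages:
                    new_rank[p] += damping * rank[page] / n
        rank = new_rank
    return rank

# Hypothetical site: "product" is linked from both "home" and "blog".
links = {
    "home": ["blog", "product"],
    "blog": ["product"],
    "product": ["home"],
}
scores = pagerank(links)
for page, score in sorted(scores.items(), key=lambda kv: -kv[1]):
    print(f"{page}: {score:.3f}")
```

In this graph "product" ends up with the highest score because it receives votes from two pages, while "blog" receives only half of the authority that "home" passes on.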

RankBrain Algorithm

RankBrain is one of the core algorithms of the Google search engine, based on machine learning technology. Its purpose is to help Google process search queries more intelligently, especially those that are new or uncommon. It is able to understand the relationship between user search intent and keywords, enhancing the relevance and accuracy of search results. The main functions and operation mechanism of the RankBrain algorithm are as follows:

①The main functions of the RankBrain algorithm:

- Understanding Search Intent: The ability to analyze the true needs behind a user's search, not just relying on keyword matching.

- Handling Unfamiliar Queries: For new or uncommon search terms, RankBrain can infer relevant search results based on existing data.

- Optimizing Search Result Ranking: By learning from user clicks and interactions, search result rankings are continuously adjusted to improve user experience.

- Enhancing Semantic Understanding: Better understanding of synonyms, phrase structures, and their context to provide more logically accurate answers.

②The operating mechanism of RankBrain algorithm:

- Data Analysis and Learning: Collecting large amounts of search data and training machine learning models to understand the relationships between different search terms and webpages.

- Feature Matching: When processing search queries, RankBrain analyzes the features of the keywords and looks for content that is semantically related.

- Dynamic Ranking Adjustment: Based on user behavior data, such as click-through rate and time spent on a page, search result rankings are optimized in real-time to ensure more relevant content appears first.

- Self-Optimization: RankBrain constantly learns new search patterns and automatically improves the algorithm's performance to adapt to changing user search behavior.

Panda Algorithm

The Panda algorithm is an important search ranking update launched by Google in 2011, aimed at penalizing low-quality content websites and boosting the rankings of high-quality content. It primarily determines rankings by evaluating the quality of a website's content, encouraging original, in-depth, and user-value-driven content, thereby improving the overall search results experience. The main functions and operation mechanism of the Panda algorithm are as follows:

①The main functions of the Panda algorithm:

- Lowering Rankings for Low-Quality Websites: Reducing the visibility of websites with duplicate content, plagiarism, keyword stuffing, excessive ads, etc., in search results.

- Promoting High-Quality Content: Encouraging original, in-depth, informative, and user-helpful pages to achieve higher rankings.

- Fighting Content Farms: Addressing websites that generate traffic through the massive accumulation of low-quality content, reducing their weight in search results.

- Improving User Experience: By filtering quality content, the accuracy and efficiency of users' ability to find information through search engines are enhanced.

②The operating mechanism of Panda algorithm:

- Content Quality Evaluation: Scoring website content based on originality, depth, readability, relevance, and other factors.

- Quality Scoring Model: Using machine learning models to classify high-quality and low-quality content, forming a "quality score" that impacts a website's ranking in search results.

- Site-Level Impact: Panda evaluates not only individual pages but also considers the overall content quality of the entire website. Too many low-quality pages can impact the website's overall ranking.

- Regular Updates: Initially released as periodic updates, Panda has since been integrated into Google's core algorithm, having a real-time impact on search rankings.

- User Signal Reference: Combining user behavior data (such as bounce rate, time on page, etc.) to further verify the actual value of a page's content.

Penguin Algorithm

The Penguin algorithm is a search ranking update launched by Google in 2012, primarily aimed at combating websites that manipulate search rankings using Black Hat SEO techniques, especially targeting unnatural external links (backlinks) and over-optimization practices. Its core goal is to enhance the fairness of search results by ensuring that website rankings are based on genuine content quality and user value, rather than achieved through manipulative tactics. The Penguin algorithm emphasizes the naturalness and quality of backlinks, encouraging websites to focus on content development and user experience, and to avoid gaining ranking advantages through unnatural methods. The main functions and operating mechanisms of the Penguin algorithm are as follows:

①The main functions of Penguin algorithm:

- Combat Unnatural Links: Target backlinks acquired through unnatural means such as paid links, Link Farms, and link exchanges, reducing their impact on rankings.

- Penalize Over-Optimization: Lower the rankings of websites that engage in SEO manipulations such as keyword stuffing and excessive optimization of Anchor Text.

- Increase the Value of Natural Links: Encourage websites to gain backlinks naturally through high-quality content rather than relying on manipulative techniques.

- Real-Time Monitoring of Link Quality: Later versions of the Penguin algorithm were integrated into Google's core algorithm to assess a website's link quality in real time, reflecting in search rankings immediately.

- Precise Penalties: Instead of penalizing an entire website, penalties are now applied more precisely to specific pages or links.

②Operation mechanism of Penguin algorithm:

- Link Quality Analysis: The algorithm detects the sources of external links (backlinks) to a website and evaluates whether the links are relevant, authoritative, and natural.

- Anchor Text Evaluation: The distribution of anchor text in backlinks is checked to identify whether there is any unnatural keyword stuffing or over-optimization.

- Bad Link Identification: Identifying low-quality or manipulative links, such as link farms, spammy directories, and irrelevant forum comments, and reducing their weight.

- Real-Time Algorithm Updates: Since the release of Penguin 4.0, the algorithm has been integrated into Google's core algorithm, processing link data in real-time and reflecting changes quickly in search rankings.

- No Direct Penalty, Just Ignored: The latest version no longer directly penalizes violating links but instead "ignores" their influence, reducing their positive impact on rankings.

- Encouraging Self-Cleanup: Website administrators can use the Disavow Tool to reject bad links or actively clean up unnatural links, helping to restore the site's ranking.
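The anchor text evaluation described above can be approximated for a self-audit by measuring how concentrated a backlink profile is on one exact-match keyword. The sketch below is a rough heuristic only: the function name `anchor_text_report`, the sample anchors, and the 0.5 threshold are all hypothetical, and Google does not publish any such cutoff.

```python
from collections import Counter

def anchor_text_report(anchors, target_keyword, threshold=0.5):
    """Summarize how concentrated a backlink profile is on one exact-match anchor.

    threshold is an arbitrary illustrative cutoff, not a published Google value.
    """
    counts = Counter(a.strip().lower() for a in anchors)
    total = sum(counts.values())
    exact_share = counts[target_keyword.lower()] / total if total else 0.0
    return {
        "exact_match_share": exact_share,
        "flagged": exact_share > threshold,
        "top_anchors": counts.most_common(3),
    }

# Hypothetical profiles: one dominated by a money keyword, one mixed.
suspicious = ["cheap shoes"] * 7 + ["Example Store", "https://example.com", "click here"]
natural = ["Example Store"] * 4 + ["cheap shoes", "https://example.com", "this review", "here"]

print(anchor_text_report(suspicious, "cheap shoes"))
print(anchor_text_report(natural, "cheap shoes"))
```

A profile in which most anchors repeat the same commercial keyword is the pattern to review first when deciding which links to clean up or disavow.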

(2) Google Search Engine Signals

Google search engine signals are the factors and data that the algorithm considers when evaluating web page rankings. These signals help Google assess the relevance, authority, and user experience of a webpage in order to provide users with the most accurate and valuable search results. Search engine signals can be grouped into several dimensions, including content quality, domain, keywords, social media, external links (backlinks), user behavior (user experience), technical performance, and page structure. For example, high-quality original content, valuable external links, good click-through rates, page loading speed, and mobile responsiveness are all important signals that influence rankings. Google also considers technical factors such as page security, HTTPS encryption, URL structure, and internal link optimization. Signals do not function independently; they are interconnected and evaluated together through complex algorithmic models so that search results accurately match user queries. As technology evolves, Google keeps adjusting the weight of these signals to adapt to changes in user search behavior and the content ecosystem. The types and details of Google search engine signals are as follows:

Domain Signals

Among the many ranking signals in Google's search engine, the domain signal is one of the factors that affect a website's search performance. Domain authority refers to the overall influence and credibility of a domain in search engines, and it is typically shaped by historical site performance, the quality and quantity of external links, and content quality. A domain with high authority often indicates that the website has greater credibility within its industry, which can help it achieve better rankings. Domain age is also worth noting. Although Google has officially stated that its impact is limited, websites that have been active for a long time and consistently maintain high-quality content are usually considered more stable and trustworthy, which gives them some ranking advantage. The transparency and consistency of domain registration information are also evaluated. Public and credible registration details can increase search engines' trust in the website, while frequent changes or opaque privacy-protected information may raise concerns. Overall, the domain signal plays a supporting role in Google's search algorithm and works together with other signals such as content quality and user experience to determine a website's performance in search rankings.

Keyword Signals

In Google’s search engine ranking mechanism, keyword signals are one of the important factors for evaluating the relevance of a webpage. The frequency and density of keywords can help search engines determine how well a webpage matches the user’s search intent. Proper keyword density can reinforce the focus of the page's theme and improve its visibility in related searches, but overusing keywords can be seen by the algorithm as keyword stuffing, which may negatively impact the ranking. In addition to frequency and density, the specific placement of keywords on the page also plays a crucial role. Incorporating keywords strategically into the title (Title Tag), body content, heading tags (such as H1, H2, H3), and URL can enhance search engines’ understanding of the page’s theme and improve its ranking potential in related search results. Especially in the title and URL, keywords help both search engines crawl and index the page while attracting user clicks, further increasing the page’s click-through rate and exposure. However, Google’s algorithm places more emphasis on natural and fluent content expression, stressing the balance between reasonable keyword placement and content quality, avoiding mechanical keyword stuffing strategies. As algorithms continue to evolve, keyword signals are no longer the sole determinant of ranking but instead work in conjunction with other factors like content relevance and user experience to ensure higher-quality search results for users.
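Keyword frequency and density are easy to measure for your own pages. The sketch below uses one common definition of density (words belonging to keyword occurrences divided by total words); the function name `keyword_density` and the sample sentences are hypothetical, and the result is a diagnostic for spotting overuse rather than a target value, since no official ideal density is assumed here.

```python
import re

def keyword_density(text, keyword):
    """Return the share of words in text that belong to occurrences of keyword.

    Works for single words and multi-word phrases; matching is case-insensitive.
    """
    words = re.findall(r"[a-z0-9']+", text.lower())
    kw = keyword.lower().split()
    if not words or not kw:
        return 0.0
    n = len(kw)
    # Slide a window of len(kw) words over the text and count exact matches.
    hits = sum(1 for i in range(len(words) - n + 1) if words[i:i + n] == kw)
    return hits * n / len(words)

single = "SEO tools help with SEO audits and SEO reporting"
phrase = "Link building takes time; good link building is earned"

print(f"{keyword_density(single, 'SEO'):.1%}")
print(f"{keyword_density(phrase, 'link building'):.1%}")
```

A value that climbs far above what natural writing produces is a hint that the page may read as keyword stuffing and deserves a rewrite.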

User Experience (UX) Signals

In Google's search ranking algorithm, user experience (UX) signals are an important reference factor for measuring webpage quality and user satisfaction. Page loading speed is one of the key metrics affecting user experience. Fast-loading pages effectively reduce bounce rates, increase user engagement, and result in better search rankings. Mobile-friendliness is also critical. With the continued growth of mobile search traffic, Google emphasizes a website's ability to adapt to different screen sizes to ensure that mobile users have a smooth browsing experience. Click-through rate (CTR) reflects how much interest users have in a particular page in the search results. A higher CTR usually indicates that the page's title and description are attractive, which helps improve rankings. Dwell time measures how long users stay on a page after clicking a search result. A longer dwell time typically indicates that the page content aligns well with the user's search intent. Related to this is the "Pogo Stick" phenomenon, where users click on a search result, quickly return to the search page, and click on another link. This may suggest that the page failed to meet the user's needs, potentially negatively impacting the ranking.

In addition, a flat website structure helps reduce the number of clicks users need to make when finding information within the site, improving navigation efficiency. Breadcrumb navigation provides clear path indications, helping users quickly understand the hierarchical relationship of the current page within the website, thereby enhancing the site's usability. User comments and site reputation are also important UX signals. Positive user feedback and a good brand reputation can enhance search engines' recognition of the website's credibility. Finally, Google also considers Chrome bookmark data. Pages that are frequently bookmarked by users may be seen as having higher value, thus indirectly influencing rankings. Overall, user experience signals not only directly relate to user satisfaction but also serve as an important basis for Google to measure webpage quality and optimize search results.

Link Signals

In Google's search ranking algorithm, link signals are one of the important factors for measuring a webpage's authority and content relevance. The quality and quantity of external links (backlinks) have a significant impact on rankings. High-quality backlinks typically come from reputable and authoritative websites and are seen as a "vote" for the target page's content, effectively improving its visibility in search results. However, quantity is not the only standard; the relevance of the link, the authority of the source website, and the naturalness of the link are equally important. Low-quality or manipulated backlinks may be identified by Google's algorithm and can even lead to ranking penalties. A sensible internal linking structure helps search engines crawl and index website content more efficiently. A good internal link layout not only optimizes the crawling path but also helps distribute page authority (Link Juice), enhancing the ranking potential of important pages. It also improves the browsing experience by making it easier for users to find related content on the site, increasing dwell time and interaction rates. The quality and quantity of outbound links are also part of link signals. A moderate number of outbound links pointing to high-quality websites gives users additional reference information and adds depth and authority to the page. Google encourages a natural and reasonable outbound linking strategy that avoids an excessive number of low-quality or irrelevant links, which may harm the site's credibility. Overall, link signals play an important role in Google's ranking system by connecting pages, transferring authority, and validating content value.

Technical Signals

In Google's search engine ranking algorithm, technical signals are core elements that ensure a website can be efficiently crawled, correctly indexed, and provide a good user experience. The structure and code quality of a website directly affect the crawling efficiency of search engine bots and the page loading speed. A clear structure and simple code help improve the search engine's understanding of the page content, thereby enhancing ranking performance. At the same time, whether the website uses a secure HTTPS protocol is also an important ranking signal. HTTPS encrypts data transmission to ensure user information security, and Google has explicitly included it in its ranking algorithm, encouraging websites to adopt a more secure online environment. Proper outbound dofollow link settings help search engines effectively recognize the weight transfer relationship between pages, enhancing the page's credibility. HTML errors and compliance with W3C validation are also crucial. Standardized code not only helps reduce page loading errors but also enhances compatibility across devices and browsers, improving the user experience. The Robots protocol (robots.txt) is used to control the access permissions of search engine bots to different parts of the website. Proper configuration can effectively guide search engines to focus on core content and avoid unnecessary crawling that wastes resources.

In addition, an XML sitemap is an important navigation tool that helps search engines quickly discover and index all the important pages of a website, and it is especially useful for websites with complex structures or newly launched sites. An SSL certificate is the foundation of the HTTPS protocol. It secures data transmission through encryption, enhancing user trust and the website's security rating. Technical signals play a fundamental role in SEO, providing a solid foundation for the website's accessibility, security, and search engine friendliness.

Content quality signals

In Google's ranking algorithm, Content Quality Signals are key factors in determining whether a webpage deserves to be displayed at the top of search results. Among these, E-E-A-T (Experience, Expertise, Authoritativeness, Trustworthiness) is an important standard for evaluating content quality. Content that demonstrates real experience, professional knowledge, authoritative endorsements, and trustworthy sources is more likely to gain recognition from search engines, especially in sensitive fields such as health and finance. Originality and uniqueness are also core elements. Google tends to display content that is unique and offers original value, rather than simple copies or compiled information. Using canonical tags can effectively prevent issues with duplicate content, helping search engines identify authoritative pages and ensure SEO weight is concentrated on the right pages. Additionally, the depth and comprehensiveness of content are key indicators of quality. Articles that delve deeply into a topic and provide thorough information typically have a ranking advantage over superficial content.

The usefulness and value of content directly affect user experience, and Google encourages the publication of content that can truly solve users' problems or provide useful information. Proper use of bullet points and numbered lists can improve readability, helping users quickly access key information and enhancing the structured display of the page. Citing reliable references and sources not only increases the content's authority but also helps improve the search engine's trust. The freshness of content and its update frequency are also important quality signals. For time-sensitive topics such as news, technology trends, etc., Google prefers to display the most recent and relevant information. Even for evergreen content, regular updates can maintain its vitality in search engines, ensuring the accuracy and contemporary relevance of the information. Content quality signals determine whether content holds dual value for both search engines and users, making it a core element in SEO optimization that cannot be overlooked.

Social signals (mentions)

In Google's ranking mechanism, social signals (also known as mention signals) are not direct ranking factors, but they play an important supporting role in enhancing website authority and content credibility. Social signals mainly manifest in the sharing and discussion of content on social media. When an article or webpage is frequently shared, liked, or commented on across multiple platforms, it indicates that the content has high user approval and viral value. This widespread interaction can indirectly increase the page's exposure, attracting more organic traffic, which in turn has a positive impact on SEO. Additionally, a brand's reputation and activity on social platforms are also important signals. If a brand has a stable fan base, active interactions, and a good reputation on social media, search engines will consider the brand to have high credibility. This online reputation not only helps build user trust but also contributes to increasing the overall authority of the website, thereby influencing search rankings. Verifying the authenticity and credibility of a webpage or content through social media and other channels is also a key dimension of social signals. When content is mentioned or cited by authoritative media, well-known industry experts, or certified accounts, search engines tend to consider this information more reliable. This "endorsement effect" not only enhances the credibility of the content but also helps improve the website's visibility in search results. Social signals reflect real user feedback and approval of content, making them an effective reference for measuring webpage value.

6、Google Search Engine Penalties

Beyond the positive algorithm signals listed above, Google also uses penalty signals to detect and penalize cheating. When a website uses manipulative tactics to influence Google search rankings, it may face penalties. These tactics include, but are not limited to, keyword stuffing, hidden text and links, purchasing backlinks, mirror websites, and automatically generating low-quality content. Once detected by Google, the website may experience ranking drops, or in severe cases, some pages may be removed from the index, or the entire site may be completely excluded from search results. Google’s algorithm updates (such as Penguin and Panda) and manual review teams regularly check for violations to ensure the fairness and high quality of search results. After being penalized, a website must correct the issues and, for manual penalties, submit a reconsideration request to potentially restore its rankings. The types of penalties, penalty results, penalty signals, and self-check methods for penalty outcomes are as follows:

(1) Types of Google search engine penalties

Manual Penalty

A manual penalty, as the name suggests, is a penalty decision made by a Google employee after reviewing a website. Google notifies the site owner through Google Search Console (formerly Webmaster Tools), outlining the general reason for the penalty and providing example URLs that violate Google’s guidelines. The site owner needs to fix the issues that violate these guidelines and then submit a reconsideration request through Search Console. Google employees will manually review the website again, and if it meets the requirements, the penalty will be lifted.

Algorithmic Penalty

If there is no notification in Google Search Console but a significant drop in rankings and traffic coincides with the release date of an algorithm update, the cause is usually an algorithmic penalty. Algorithmic penalties cannot be lifted through a manual request. The only way to recover is to clean up the violations on the website and wait for the algorithm to recalculate the rankings.

(2) Google Search Engine Penalty Results

Mild

For violating websites, Google's penalty measures typically include domain devaluation, page devaluation, and ranking reduction. Domain devaluation refers to the weakening of the entire website's authority, leading to a significant drop in the ranking and traffic of all pages, affecting the overall search visibility of the website. Page devaluation is a penalty imposed on specific violating pages, causing those pages to drop in search rankings and reducing their exposure. Ranking reduction is the most common form of penalty, where violating pages are moved to lower positions in search results, resulting in a significant decline in click-through rates and traffic. Although these penalties do not lead to the complete removal of the website from the index, they directly impact the website's traffic and search performance, forcing webmasters to correct violations to restore rankings.

Severe

For websites that violate guidelines, Google's severe penalties are typically reflected in a significant drop in keyword rankings, with various forms and far-reaching consequences. Partial Keyword Penalty is one common scenario, usually occurring when the website’s core keywords are penalized, while secondary keywords and long-tail keywords remain unaffected. This type of penalty often results from the over-optimization of external links (backlinks) or the excessive accumulation of spam links. Especially, highly concentrated anchor texts are considered unnatural linking behavior, leading to a dramatic decline in the rankings of main keywords. Comprehensive Keyword Ranking Decline is a more serious form of penalty, involving a significant drop in the rankings of all keywords on the website, sometimes pushing them from the first page of search results to dozens of pages behind. Unlike normal ranking fluctuations, this kind of comprehensive drop is usually not due to algorithm updates or competitive pressure, but rather a clear penalty signal from the search engine, indicating that the website has committed serious violations.

(3) Google search engine penalty signal

- Low-Quality Link Types: If a large portion of external links (backlinks) comes from a single source (e.g., forum profiles, blog comments), they may be considered spammy links, indicating a low-quality website.

- Irrelevant 301 Redirects: Redirecting old URLs (that have earned backlinks and referring domains) to new pages unrelated to their content (e.g., redirecting old blog posts to the homepage) may be seen by Google as a soft 404, failing to effectively pass link value.

- Broken Links (404 Errors): A website with a significant number of broken links (404 error pages) is considered poorly maintained, lowering user experience, which can subsequently affect rankings.

- Too Many Affiliate Links: A website containing an excessive number of affiliate marketing links (Affiliate Links) is likely to be considered overly commercialized, which can negatively impact the site's authority and trustworthiness.

- Lost External Links: A continuous decline in the number of backlinks may signal a decrease in a website's popularity, leading to a significant drop in rankings.

- Excessive Link Exchanges: Frequently exchanging links with other websites, especially for links with no real value, is considered a violation by Google and may trigger penalties.

- Pop-up or Disruptive Ads: According to Google's official guidelines, frequent pop-up ads or ads that disrupt user experience are considered signs of a low-quality website, potentially affecting page scores.

- Over-Optimization of Keywords: Keyword stuffing, repeating keywords excessively in title tags, or over-optimizing page content are recognized by Google as unnatural SEO practices, which can lower page rankings.
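The first signal in the list above, backlinks concentrated in a single source type, can be checked roughly from a backlink export. The sketch below is only an illustration: the `(url, link_type)` input shape and the link type labels are assumptions, and no threshold is applied because the source does not give one.

```python
from collections import Counter


def link_type_shares(backlinks):
    """Return each link type's share of all backlinks, largest share first."""
    # backlinks is a list of (url, link_type) pairs; this shape is hypothetical.
    counts = Counter(link_type for _url, link_type in backlinks)
    total = sum(counts.values())
    return [(link_type, count / total) for link_type, count in counts.most_common()]


sample = [
    ("https://example.com/a", "forum profile"),
    ("https://example.com/b", "forum profile"),
    ("https://example.com/c", "forum profile"),
    ("https://example.com/d", "editorial"),
]
for link_type, share in link_type_shares(sample):
    print(f"{link_type}: {share:.0%}")
```

A profile in which one low-value type dominates is the pattern the list describes; what counts as "a large portion" remains a judgment call.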

(4) Self-checking method for Google search engine penalty results

Self-check Method 1 for Search Engine Penalty: Use the "site" command to query the domain

Using the Site: Operator to query a domain is a commonly used self-check method to determine whether a website has been penalized by search engines. By entering site:yourdomain.com (replace yourdomain.com with the actual domain) into the Google search bar, you can view the number and range of pages indexed by Google for that website. If the search results are empty or the number of indexed pages has significantly decreased, it may indicate that the website has been de-ranked or removed from the index by the search engine.

Self-check Method 2 for Search Engine Penalty: Search for the Website Name

Another effective self-check method to detect if a website has been penalized by the search engine is to search for the website’s name. Simply enter the brand name or company name in the Google search box and check if the website appears in the search results. If the website does not appear on the first page of results for the brand name search, especially if the official website is not prioritized, it may indicate that the website has been penalized or demoted by the search engine.

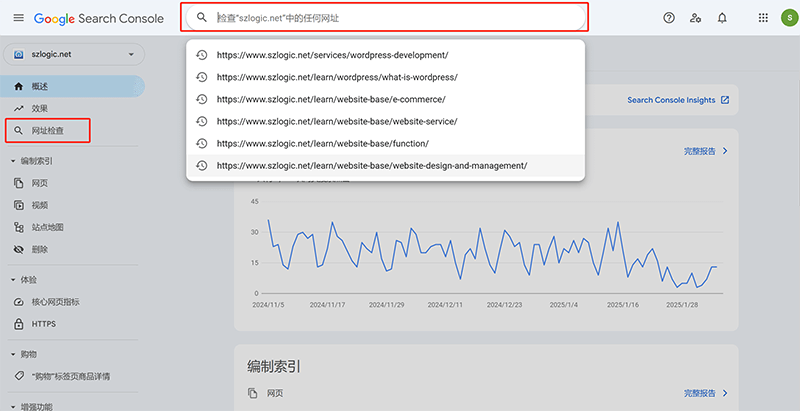

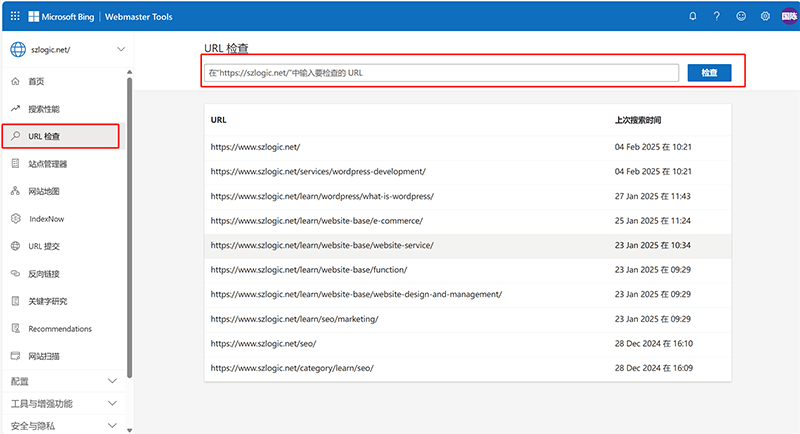

Self-check Method 3 for Search Engine Penalty: Check the Number of Indexed Pages in Google Search Console

Using the Google Search Console (GSC) to check the number of indexed pages is a crucial self-diagnosis method for detecting whether a website has been penalized by search engines. After logging into GSC, go to the "Coverage" or "Pages" report to visually view the number of indexed pages and changes in indexing status. If a sudden and significant drop in indexed pages is observed, or a large number of pages are marked as "Excluded," it may be a sign of algorithmic penalties or technical issues on the site. In addition, GSC provides detailed information about indexing errors, crawl anomalies, and manual actions, helping webmasters quickly identify problems. Regularly monitoring this data can help detect potential risks early and take corrective measures to ensure the website's visibility in search engines.

Self-check Method 4 for Search Engine Penalty: Comprehensive Tracking of Keyword Rankings

Comprehensively tracking keyword rankings is one of the important self-check methods to determine whether a website has been penalized by search engines. By using keyword ranking tracking tools such as Ahrefs, SEMrush, Moz, etc., webmasters can continuously monitor the ranking fluctuations of core keywords in search engines. If certain important keywords suddenly experience a significant drop in ranking, especially if they fall to a much lower position or drop from the first page to subsequent pages, it may indicate that the website has been penalized by search engines. Compared to normal ranking fluctuations, a penalty-induced drop is usually more drastic and persistent. By regularly reviewing ranking trends, webmasters can detect potential penalty risks in a timely manner, analyze the reasons, and take corrective actions.

Ⅱ、Technical SEO

Technical SEO is a key factor in improving a website's ranking in Google search results and involves a variety of technical optimization strategies. First, the proper use of HTML Tags (such as Title, Meta Description, H1, Alt, etc.) can help search engines better understand the content of a page, thus improving indexing efficiency. In addition, integrating JSON-LD Format Structured Data (Schema Code) provides a clear data structure to search engines, increasing the chances of rich search result features (such as star ratings, FAQs, etc.). In terms of site architecture, a well-designed Website Structure not only enhances user experience but also allows search engine crawlers to efficiently index content. It is recommended to use a clear internal link hierarchy and breadcrumb navigation. At the same time, ensuring the site returns the correct HTTP Status Codes (such as 200, 301, 404, etc.) is crucial to help search engines properly identify page status and avoid the negative impact of broken links or duplicate content.
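As a rough illustration of the JSON-LD structured data mentioned above, the following sketch builds a schema.org FAQPage object and wraps it in the script element that would be placed in the page head. The function names and the sample question are assumptions made for this example, and whether a given page actually receives a rich result is decided by Google.

```python
import json


def build_faq_jsonld(faqs):
    """Return a JSON-LD string describing a list of (question, answer) pairs."""
    data = {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in faqs
        ],
    }
    return json.dumps(data, ensure_ascii=False, indent=2)


def as_script_tag(jsonld):
    """Wrap a JSON-LD string in the script element used for structured data."""
    # Escape "</" so text inside the JSON cannot close the script element early.
    safe = jsonld.replace("</", "<\\/")
    return '<script type="application/ld+json">\n' + safe + "\n</script>"


sample = [("What is technical SEO?", "Optimizing a site so it can be crawled and indexed.")]
print(as_script_tag(build_faq_jsonld(sample)))
```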

From a performance perspective, the HTML file of a page should be kept under 15 MB: Googlebot fetches only the first 15 MB of an HTML file, so content beyond that limit is not considered for indexing. In addition, enabling an SSL certificate (HTTPS) is a ranking signal in Google's algorithm, ensuring the security of data transmission and enhancing user trust. Finally, by creating and maintaining a high-quality XML sitemap, you can explicitly submit your website’s important pages to Google, helping search engines discover new content and accelerate index updates. By comprehensively applying these Google technical SEO strategies, you can effectively improve your website’s search visibility and strengthen its ranking advantage in highly competitive search results. The specific strategies required for Google technical SEO are as follows:

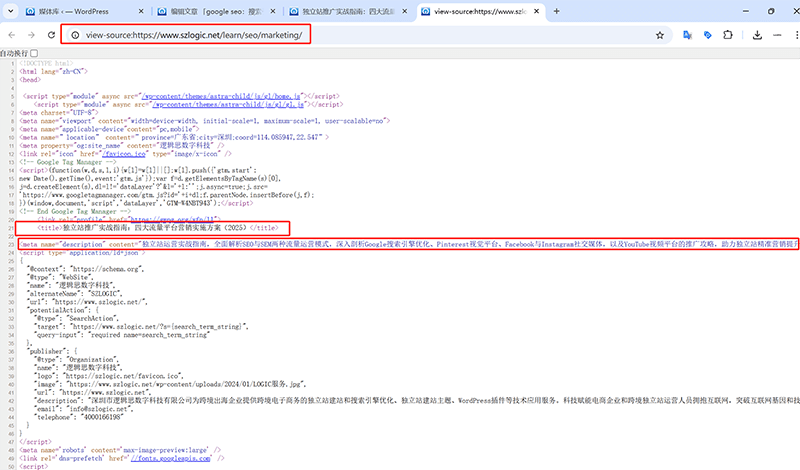

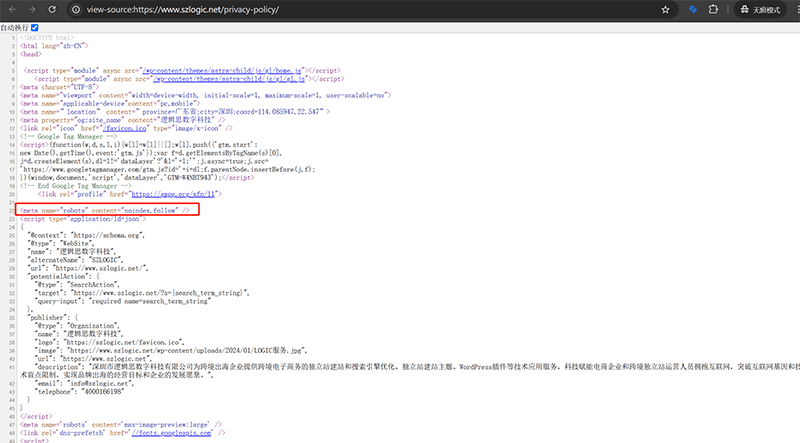

1、TDU Tags

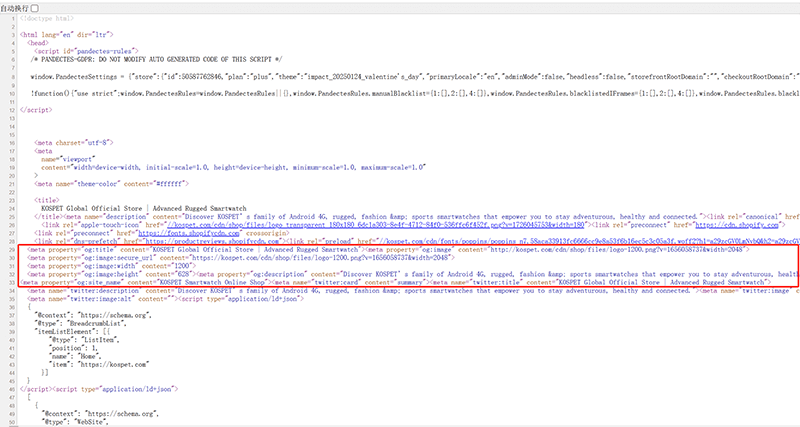

As indicated by the red box annotation in the front-end code example of the page above, TDU stands for the page’s Title, Description, and URL. When the meta keywords tag still played a role in SEO, the set was also referred to as TDKU. However, on September 21, 2009, Google officially stated that it does not use the HTML meta keywords tag as a ranking factor. The statement was published on the official Google Webmaster Central Blog by Matt Cutts, then an engineer on Google's search quality team and head of its webspam team, and made clear that Google's search algorithm does not reference the meta keywords tag to determine page rankings. As a result, meta keywords has essentially lost its practical value for Google, and most SEO professionals have simplified TDKU to TDU.

(1)title

The Importance of the Title Tag for Google SEO

The title tag is a crucial element in SEO, and its importance can be ranked as the highest. It is where we input the theme name (title) and strategically place core SEO keywords, directly affecting the page's ranking in Google search results.

Technical Implementation of the Page Title

The author's company, Logic Digital Technology, focuses on the full ecosystem of WordPress technology development. It covers WordPress web design and development and has long worked with the PHP-based backend technology stack. In actual development, the output of front-end page information, including title data, is implemented through PHP’s dynamic data processing and rendering, which is a typical use of backend programming. In the WordPress environment, theme templates call PHP functions to output titles. The function the_title() prints the title of the current post inside the page body, while the content of the title tag itself is usually produced either by the older wp_title() function or, in current themes, by declaring add_theme_support('title-tag') so that WordPress generates it through wp_get_document_title(). To improve development efficiency and code maintainability, developers can also combine custom functions, hooks, and filters (for example the document_title_parts filter) to flexibly adjust the output logic of the title.

Title Tag Writing Guidelines

In Google SEO, the page’s title tag plays a crucial role. It is not only a core element for search engines to understand the page content but also a key factor in attracting user clicks. Therefore, writing a high-quality title requires following certain guidelines to improve both the page’s ranking and click-through rate. First, the page title should align with user search intent. This means that when crafting the title, you must think from the user's perspective and understand what information they truly want when searching for a specific keyword. The title should accurately summarize the core content of the page, ensuring users can quickly determine whether it meets their needs when viewing the search results. Secondly, including core keywords is essential. Integrating highly relevant core keywords into the title helps search engines better understand the theme of the page, thereby improving its ranking in related search results. However, keywords should be included naturally, avoiding keyword stuffing, which can harm both user experience and search engine evaluation. In addition to satisfying search intent and keyword optimization, the title should also attract user clicks. A click-worthy title often sparks user interest, arouses curiosity, or meets a specific need. You can enhance title appeal by adding emotionally engaging words, numbers, unique selling points, or compelling descriptions, thereby boosting the click-through rate. Finally, keep the title short enough to be fully displayed in search results, since a truncated title is harder to read. Google truncates titles by display width rather than by a fixed character count, so the 29-character guideline is best read as a limit for full-width characters such as Chinese; English titles typically fit within roughly 50 to 60 characters. Although the display limit varies across devices, keeping the title concise, clear, and on-point improves readability and visual impact.
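The title guidelines above (include the core keyword, avoid stuffing, stay within a display limit) can be turned into a simple pre-publish check. This is a minimal sketch, not a Google rule set: the function name is hypothetical, the limit is passed in as a parameter because the displayed length varies by device and script, and the value 60 used in the example is an assumed figure for an English title.

```python
def check_title(title, keyword, max_chars):
    """Return a list of human-readable problems found in a page title."""
    problems = []
    text = title.strip()
    if not text:
        return ["title is empty"]
    if len(text) > max_chars:
        problems.append(f"title has {len(text)} characters, limit is {max_chars}")
    occurrences = text.lower().count(keyword.lower())
    if occurrences == 0:
        problems.append(f"core keyword '{keyword}' is missing")
    elif occurrences > 1:
        # Crude stuffing check: the same keyword repeated in one title.
        problems.append(f"core keyword '{keyword}' appears {occurrences} times")
    return problems


print(check_title("Google SEO Guide: Technical Practices for 2025", "Google SEO", 60))
```

An empty list means none of the three checks fired. Intent match and click appeal still need human review.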

(2)description

The Importance of the Description for Google SEO

The description, also known as the meta description, is an HTML meta tag used to briefly summarize the content of a webpage. However, Google does not use the meta description as a direct ranking factor for keywords. Despite this, a well-crafted custom meta description still holds significant value, even though it may not always be 100% adopted by the search engine for display in search results (SERP). In some cases, Google may automatically extract what it deems the most relevant text snippet from the page content based on user search intent to display as the description. Even so, a well-structured and engaging meta description still has a high chance of appearing in the SERP, helping users quickly understand the page content and increasing click-through rates.

Technical Implementation of the Page Description

The technical implementation of the description is similar to the title tag. It is typically developed using PHP and is dynamically generated through WordPress built-in functions. In the theme's functions.php or header.php file, code can be written to add a custom meta description input field in the backend, dynamically rendering the meta description to the frontend of the page. This approach not only improves SEO control over the page but also allows flexible management of different pages' meta descriptions.

Meta Description Writing Guidelines

The page's Description (Meta Description) is used to briefly summarize the content of the webpage. Writing an effective meta description requires following certain guidelines. First, control the character length. It is recommended to keep it within 155 English characters or 75 Chinese characters to ensure it is fully displayed in the search results page (SERP) without being cut off, which could negatively affect the reading experience. In addition, the meta description should closely focus on the core content of the page, accurately conveying the page's value and theme, helping users quickly understand the information. Properly embedding core keywords can improve search relevance. While it won’t directly affect ranking, it helps attract the attention of search users. The language of the meta description should be engaging, using concise and persuasive wording to spark user interest and avoid keyword stuffing or vague descriptions.
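To see how a description might look when cut at the recommended length, a small preview helper can be used. The sketch below assumes the 155-character guideline from the paragraph above and cuts at a word boundary; the real truncation point in search results is decided by Google and may differ. Text without spaces, such as Chinese, is simply cut at the limit.

```python
def preview_description(description, max_chars=155):
    """Return the description as it might appear if cut at max_chars."""
    text = " ".join(description.split())  # collapse line breaks and repeated spaces
    if len(text) <= max_chars:
        return text
    cut = text[:max_chars]
    # Back up to the last space so the preview never ends mid-word.
    if " " in cut:
        cut = cut[: cut.rfind(" ")]
    return cut.rstrip(" ,;:.") + "..."


long_text = "Learn how Google ranks pages and how to apply technical SEO. " * 5
print(preview_description(long_text))
```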

(3)URL

URL weight for Google SEO

The page's URL (Uniform Resource Locator) holds certain weight in Google SEO. While its influence is not as significant as core factors such as content quality and backlinks, properly optimizing the URL structure can still have a positive impact on both search rankings and user experience. First, a concise and clear URL is more beneficial for search engine crawling and indexing. A well-defined path structure helps Google better understand the page hierarchy and content theme, thereby improving the page's visibility in relevant searches.

Technical Implementation of Page URL Function

The fixed URL format is a native feature of the WordPress system, and it can be set in the WordPress site management dashboard under the "Settings" section in the left sidebar, specifically in the "Permalinks" option. Once the link format is fixed, you can customize the URL suffix in the article page management interface before publishing the page.

URL Writing Guidelines

Including core keywords in the URL can enhance the relevance signal of the page, helping search engines assess the alignment between the page content and user queries. While the role of keywords in the URL is relatively limited, in highly competitive search results, this small optimization may be the detail that influences rankings. Additionally, a concise URL can improve user click-through rates, avoiding long and complex parameters, making the link appear more trustworthy and professional. A good URL structure should also be logical and consistent, with recommendations to use hyphens (-) to separate words, and to avoid underscores (_) or meaningless characters. For dynamic URLs, it’s best to minimize redundant parameters, maintaining simplicity and readability. Overall, a well-structured, clear hierarchical URL with appropriate keywords not only aids SEO optimization but also enhances user experience and improves the overall accessibility of the website.
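The URL guidelines above (lowercase words separated by hyphens, no underscores or meaningless characters) can be sketched as a small slug helper. The function name is hypothetical, and this simplified version drops characters that have no ASCII equivalent, so it is only suitable for titles written in Latin script.

```python
import re
import unicodedata


def slugify(title):
    """Turn a page title into a lowercase, hyphen-separated URL slug."""
    # Decompose accented letters and drop whatever cannot be expressed in ASCII.
    ascii_text = unicodedata.normalize("NFKD", title).encode("ascii", "ignore").decode("ascii")
    # Replace every run of characters that is not a letter or digit with one hyphen.
    slug = re.sub(r"[^a-z0-9]+", "-", ascii_text.lower())
    return slug.strip("-")


print(slugify("Google SEO: A Comprehensive_Guide (2025)"))
# google-seo-a-comprehensive-guide-2025
```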

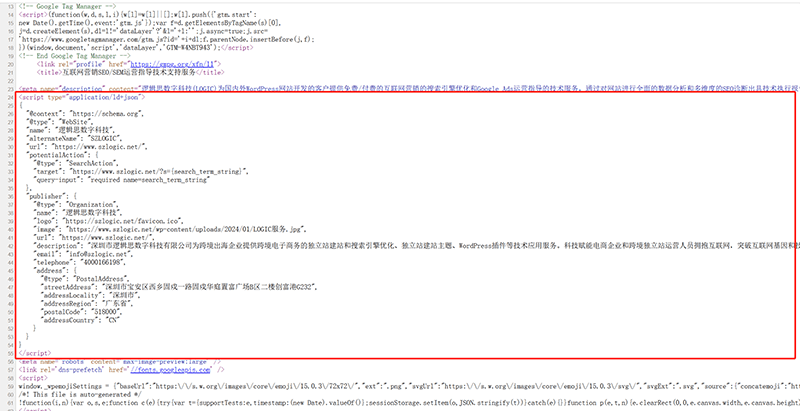

2、Property Tags

The meta property tag highlighted in the red box in the image above is the front-end code of the page. The meta property tag is related to the Open Graph (OG) protocol, which is used to optimize the way web content is displayed on social media platforms such as Facebook, Twitter, LinkedIn, etc. Although these tags do not directly affect Google search rankings, they play an important role in improving the website's click-through rate and user interaction, which in turn indirectly positively impacts SEO.

By adding meta tags such as meta property="og:title", meta property="og:description", and meta property="og:image" to web pages, websites can control the display of the title, description, and image when shared on social media platforms. Well-optimized Open Graph tags can ensure that the content displayed when users share a webpage is more attractive, thereby increasing click-through rates and share frequencies. Higher social interaction may bring more external traffic, further increasing page exposure and user visits. Although Meta property tags do not directly affect Google search rankings, they indirectly enhance the page's appeal on social platforms, boosting website traffic, which positively impacts search engine rankings. Furthermore, the frequency of user clicks and shares is considered a signal of user behavior, and search engines may evaluate website content's quality and relevance based on these signals, thereby influencing rankings. Details of each type of meta property tag and the technical implementation for outputting meta property tag information to frontend page code are as follows:

(1) Types of Meta Property Tags

1、<meta property="og:locale" content="Page language tag (for example en_US)" />

2、<meta property="og:title" content="Page title" />

3、<meta property="og:description" content="Page description" />

4、<meta property="og:url" content="Page URL" />

5、<meta property="og:site_name" content="Site name" />

6、<meta property="og:image" content="Image URL" />

(2) Technical Implementation of Meta Property Tags

To implement these tags, a custom function can be created in the theme's functions.php file to dynamically generate the necessary meta property tags. The function is attached to the wp_head hook, which runs inside the head section of the page when the theme's header.php calls wp_head(). When a page is accessed, the function dynamically outputs the corresponding Open Graph tags, such as og:title, og:description, and og:image, based on the page content. Furthermore, you can customize tag values according to different page types (e.g., posts, homepage, category pages) to ensure each page contains accurate metadata.
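The article's own implementation is in PHP inside WordPress. As a language-neutral illustration of the same logic, the sketch below renders the six Open Graph tags from a dictionary of page fields in Python. The dictionary keys and the function name are assumptions made for this example; the one essential step is escaping the values so that quotes in a title cannot break the attribute.

```python
from html import escape


def render_og_tags(page):
    """Build Open Graph meta tags from a dict of page fields."""
    # Maps Open Graph property names to keys in the page dict (keys are hypothetical).
    fields = [
        ("og:locale", "locale"),
        ("og:title", "title"),
        ("og:description", "description"),
        ("og:url", "url"),
        ("og:site_name", "site_name"),
        ("og:image", "image"),
    ]
    lines = []
    for prop, key in fields:
        value = page.get(key)
        if value:  # skip fields the page does not provide
            lines.append(f'<meta property="{prop}" content="{escape(value, quote=True)}" />')
    return "\n".join(lines)


print(render_og_tags({
    "locale": "en_US",
    "title": 'Google SEO "Comprehensive" Guide',
    "url": "https://example.com/google-seo-guide",
}))
```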

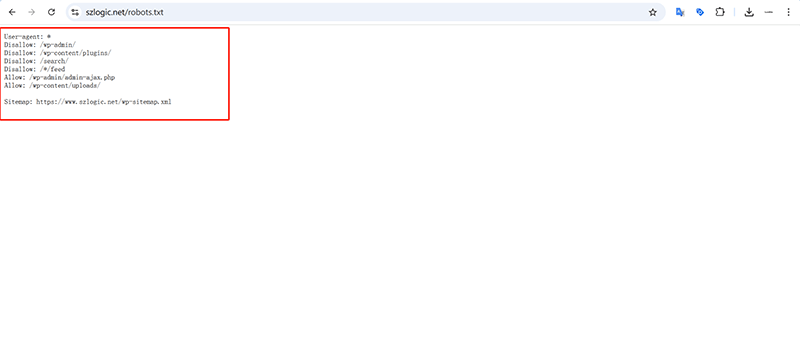

3. Robots Protocol (robots.txt)

The robots protocol, also known as robots.txt, is shown in the red box in the image above, which contains the content of the robots.txt file. The robots.txt file is a plain text file. It is not written in a programming language, but follows a set of rules known as the "Robots Exclusion Standard" (also called the Robots Exclusion Protocol and published as RFC 9309). These rules tell search engine crawlers which pages or parts of the site may be crawled and which should not be crawled.
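How a crawler decides between conflicting rules can be sketched in a few lines. The example below assumes the precedence described in RFC 9309 and in Google's documentation: the rule with the longest matching path wins, and on a tie the Allow rule wins. The function names and the sample rules are illustrative only. Python's built-in urllib.robotparser is not used here because it applies rules in file order and does not expand the `*` wildcard.

```python
import re


def _pattern_to_regex(pattern):
    """Translate a robots.txt path pattern into a compiled regular expression."""
    anchored = pattern.endswith("$")
    if anchored:
        pattern = pattern[:-1]
    # "*" matches any sequence of characters; everything else is literal.
    body = ".*".join(re.escape(part) for part in pattern.split("*"))
    return re.compile(body + ("$" if anchored else ""))


def is_allowed(rules, path):
    """Decide whether path may be crawled under (directive, pattern) rules."""
    best_len, allowed = -1, True  # no matching rule means the path is allowed
    for directive, pattern in rules:
        if not pattern:
            continue  # an empty pattern matches nothing
        if _pattern_to_regex(pattern).match(path):
            is_allow = directive.lower() == "allow"
            # The longest pattern wins; on a tie, Allow wins.
            if len(pattern) > best_len or (len(pattern) == best_len and is_allow):
                best_len, allowed = len(pattern), is_allow
    return allowed


rules = [
    ("Disallow", "/wp-admin/"),
    ("Allow", "/wp-admin/admin-ajax.php"),
    ("Disallow", "/*/feed"),
]
for path in ["/wp-admin/options.php", "/wp-admin/admin-ajax.php", "/blog/feed", "/about/"]:
    print(path, "allowed" if is_allowed(rules, path) else "blocked")
```

The second path shows why an Allow line is useful: it carves a single file out of an otherwise blocked directory.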

(1) Writing Guidelines for Robots Protocol (robots.txt)

If you are using a WordPress website, note that WordPress serves a dynamically generated (virtual) robots.txt by default. To use the rules below, either place a physical robots.txt file containing them in the site root, which takes precedence over the generated one, or override the generated output through an SEO plugin or the robots_txt filter. For other website platforms or frameworks, users can customize the configuration based on their directory structure to specify which paths crawlers are allowed or disallowed to access. In this context, "Disallow" indicates that crawling is prohibited, while "Allow" means crawling is permitted. By default, any path that is not matched by a "Disallow" directive is allowed. Therefore, "Allow" directives are needed only to carve out an exception inside a disallowed path, as the admin-ajax.php rule below does.

User-agent: *

Disallow: /wp-admin/

Disallow: /wp-content/plugins/

Disallow: /search/

Disallow: /*/feed

Allow: /wp-admin/admin-ajax.php

Allow: /wp-content/uploads/

4、Sitemap (XML Sitemap)

The example image above shows an indexable XML sitemap in WordPress. Under normal circumstances, every time a standard page or post is published, its URL is automatically included in the XML sitemap and grouped by content type. The XML sitemap plays a critical role in SEO because it helps search engines crawl and index website content more efficiently. A sitemap is a file, usually in XML format, that lists the important pages of a website so that the site's structure and content are clearly presented to search engines. Through the sitemap, search engines can more easily discover and index content that might not be linked from other pages, improving the overall visibility of the site. Sitemaps are especially important for large or newly launched websites because they reduce the chance that crawlers miss critical pages. The sitemap format can also carry details such as each page's last modification date, update frequency, and priority. Google states that it ignores the update frequency (changefreq) and priority values and uses the last modification date (lastmod) only when it is consistently accurate, so keeping lastmod correct is what helps search engines schedule crawling. A sitemap can speed up the discovery of new pages and improve crawling efficiency, which can indirectly benefit rankings and traffic. Additionally, submitting the sitemap to Google Search Console helps monitor the site’s indexing status and identify potential crawling issues.
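For sites that do not generate a sitemap automatically, the file format itself is simple. The following sketch builds a minimal sitemap with Python's standard library; the function name and the example.com URLs are placeholders, and only the loc and lastmod elements of the sitemaps.org protocol are emitted.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages):
    """Return sitemap XML for a list of (url, last_modified) pairs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in pages:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc
        ET.SubElement(url, "lastmod").text = lastmod  # W3C date, e.g. 2025-01-15
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


print(build_sitemap([
    ("https://example.com/", "2025-01-15"),
    ("https://example.com/google-seo-guide", "2025-02-01"),
]))
```

ElementTree escapes characters such as `&` in URLs automatically, which hand-written sitemaps often get wrong.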

5. SSL Certificate (https)


The pop-up marked with a red box in the image above indicates that the website has successfully installed an SSL certificate and the connection is secure. Without a certificate, the browser shows a security warning instead. An SSL certificate (named after Secure Sockets Layer, the predecessor of today's TLS protocol) enables encrypted transmission between users and the website. With a certificate installed, the site can be served over HTTPS instead of plain HTTP, creating an encrypted channel that prevents data from being intercepted or tampered with in transit. In SEO, SSL certificates matter because Google has explicitly made HTTPS a ranking signal, so HTTPS sites may gain an advantage in search rankings. HTTPS sites are also considered more reliable and secure, which enhances user trust, particularly in scenarios involving sensitive information or online transactions. Additionally, enabling HTTPS prevents browsers from flagging the site as "not secure," a label that can lead users to leave the site, hurting bounce rates and user engagement and ultimately affecting SEO performance. Therefore, installing an SSL certificate not only enhances website security but also supports search rankings and overall SEO effectiveness.

(1) Technical Implementation of SSL Certificate Installation

In WordPress, installing an SSL certificate and enabling HTTPS is typically done through the site settings in a Linux-based control panel. Most hosting providers offer a graphical interface for requesting and installing a certificate directly from the control panel. If you are not using a control panel, you can request and install the certificate manually from the Linux command line. First, log in to the server and make sure Certbot is installed. Certbot is a widely used tool for obtaining free SSL certificates from Let's Encrypt. On Debian or Ubuntu systems, install it with `sudo apt-get install certbot`. Depending on the web server, an additional plugin may be required: `sudo apt-get install python3-certbot-apache` for Apache or `sudo apt-get install python3-certbot-nginx` for Nginx. Once installation is complete, use the `certbot` command to request the certificate.

Run `sudo certbot --apache` (or `sudo certbot --nginx`, depending on the web server). Certbot detects the server configuration, requests the certificate, and installs it. During this process you need to provide a valid domain name whose DNS record already points to the server's IP address. Once the certificate is issued, Certbot configures Apache or Nginx to serve HTTPS. Certbot also supports automatic renewal: a scheduled job (for example, a cron job that runs `certbot renew`) checks the certificate regularly and renews it before it expires, so visitors never see an expired-certificate warning. After HTTPS is enabled, update the site settings in the WordPress dashboard so that both the WordPress Address and the Site Address begin with "https." It is also strongly recommended to redirect all HTTP requests to HTTPS so that no traffic is sent unencrypted. This is done in the web server's configuration (such as Apache's `.htaccess` or the Nginx configuration file).
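
As a complement to automatic renewal, it can be useful to monitor how many days a certificate has left. The following is a small sketch using Python's standard `ssl` module; the helper name `days_until_expiry`, the sample `notAfter` value, and the 30-day alert threshold are all assumptions chosen for illustration.

```python
import ssl
from datetime import datetime, timezone

def days_until_expiry(not_after, now=None):
    """Return the whole days left before a certificate's notAfter timestamp.

    `not_after` uses the format found under the "notAfter" key of the dict
    returned by ssl.SSLSocket.getpeercert().
    """
    expires = datetime.fromtimestamp(ssl.cert_time_to_seconds(not_after), tz=timezone.utc)
    now = now or datetime.now(timezone.utc)
    return (expires - now).days

# Hypothetical certificate expiry and a fixed "current" time for a repeatable result
not_after = "Jun  1 12:00:00 2025 GMT"
fixed_now = datetime(2025, 5, 2, 12, 0, 0, tzinfo=timezone.utc)

remaining = days_until_expiry(not_after, now=fixed_now)
needs_attention = remaining <= 30  # illustrative threshold, not a Certbot setting
print(remaining, needs_attention)
```

In a real check, the `notAfter` string would come from a live TLS connection to the site rather than a hard-coded value.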

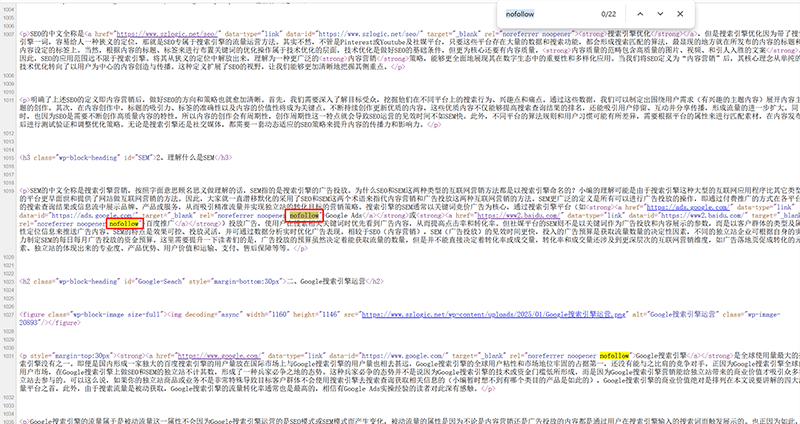

6. Nofollow and Dofollow

In Google SEO, nofollow and dofollow describe the two ways search engines can be told to handle a link on a page. They have different impacts on website rankings and crawler behavior, and understanding their roles is crucial for optimizing a site. Dofollow is the default: it is not an attribute you add, but simply the informal name for any link that does not carry the nofollow attribute. By default, Google passes PageRank and other ranking signals through such links. This allows a website to lend authority to the pages it links to, helping those pages rank higher in search results. Dofollow links are beneficial for SEO and are typically used for internal linking and for linking to important external resources.

In contrast, the nofollow attribute (`rel="nofollow"`) asks search engines not to pass ranking signals or authority through the link. Google has treated nofollow as a hint rather than a strict directive since 2019, but in general it does not count such links as votes for the target page. Nofollow is commonly used where passing link equity is not desired, such as links in comment sections, advertisements, or paid links; Google also offers the more specific values `rel="ugc"` for user-generated content and `rel="sponsored"` for paid links. By using nofollow, websites can avoid passing authority to irrelevant or low-quality pages, thereby reducing the risk of being associated with spam or over-optimization.
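
To see the distinction in markup terms, the sketch below audits a fragment of HTML and separates links that carry `nofollow` from those that do not. It uses only Python's standard `html.parser`; the class name `LinkRelAudit` and the sample HTML are assumptions for illustration.

```python
from html.parser import HTMLParser

class LinkRelAudit(HTMLParser):
    """Collect link targets, split by whether rel contains the nofollow token."""

    def __init__(self):
        super().__init__()
        self.followed = []   # links without nofollow (the default, "dofollow")
        self.nofollow = []   # links explicitly marked rel="nofollow"

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        attr_map = dict(attrs)
        href = attr_map.get("href")
        if href is None:
            return
        # rel is a space-separated token list, e.g. "nofollow noopener"
        rel_tokens = (attr_map.get("rel") or "").lower().split()
        if "nofollow" in rel_tokens:
            self.nofollow.append(href)
        else:
            self.followed.append(href)

# Hypothetical page fragment
sample_html = """
<p><a href="/pricing/">Pricing</a>
<a href="https://partner.example/" rel="nofollow noopener">Partner</a>
<a href="https://docs.example/guide">Guide</a></p>
"""

audit = LinkRelAudit()
audit.feed(sample_html)
print("followed:", audit.followed)
print("nofollow:", audit.nofollow)
```

A check like this can be run over exported page HTML to confirm that paid or user-submitted links are marked as intended.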

(1) Technical Implementation of Nofollow

For websites built with self-hosted WordPress (WordPress.org), adding the nofollow attribute to outbound links requires no extra coding, because WordPress supports this natively. When adding anchor text in the post editor, the link settings include an option to “mark as nofollow”. On standard pages built with Elementor's page editor, you can click the link settings button next to the link input field and check the “Add nofollow” option in the dropdown menu.

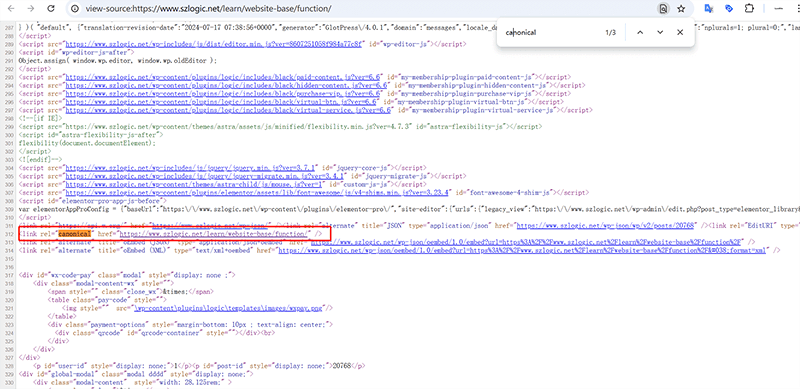

7. Canonical Tags

In the image above, the red box highlights the canonical tag as applied on an article page. The canonical tag is an HTML element that tells search engines which version of a page is preferred. When a website has multiple pages with similar or duplicate content, the canonical tag helps search engines identify the most authoritative version by pointing to the original page. The tag is placed in the `<head>` section of the page and links to the URL of the original version, so that search engines are not confused by duplicate content when indexing. In SEO, the canonical tag plays a crucial role. First, it avoids duplicate-content indexing issues and prevents multiple similar pages from diluting a site's ranking. If search engines judge several pages to be duplicates, they may index only one of them, and not necessarily the one you would choose, which hurts the performance of the others in search results. By using the canonical tag, a site can state clearly which page should be treated as the original, so that all SEO value is concentrated on the preferred page.

The canonical tag can also optimize the site's link structure and traffic distribution, especially when multiple URLs display the same content (such as product pages, category pages, or tag pages on eCommerce sites). Consolidating authority on one main page helps that page rank better in search results. Note that ordinary pages rarely need special canonical handling, because their URLs involve only hierarchical relationships and are not reachable through category or tag paths, so multiple URLs for the same content do not arise. Product pages and article pages are different: they often belong to categories and tags. They are therefore the most frequent users of the canonical tag and the pages that need special attention.

(1) Technical Implementation of the Canonical Tag

The implementation of the canonical tag follows the same principle as the title, description (meta description), and meta property tags mentioned above. It is achieved by using PHP to call WordPress built-in functions together with hooks and filters. This adds a canonical input field to the page editing screen, and the URL entered there is output in the front-end code, where search engine crawlers can read it when they crawl the page.
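
The output itself is a single `<link>` element. As a language-neutral illustration (written in Python rather than the PHP a WordPress theme would use), the sketch below builds that element from a page URL. The helper name `canonical_link_tag` is hypothetical, and dropping the entire query string and fragment is an assumption that suits tracking parameters but not sites whose content depends on query parameters.

```python
from html import escape
from urllib.parse import urlsplit, urlunsplit

def canonical_link_tag(url):
    """Build a canonical <link> element for the <head> of a page.

    Assumption: the preferred URL is the same path without query string
    or fragment, with the host name lower-cased.
    """
    parts = urlsplit(url)
    clean_url = urlunsplit((parts.scheme, parts.netloc.lower(), parts.path, "", ""))
    return f'<link rel="canonical" href="{escape(clean_url, quote=True)}" />'

# Hypothetical URL carrying a tracking parameter and a fragment
tag = canonical_link_tag("https://Example.com/blog/seo-guide/?utm_source=newsletter#intro")
print(tag)
```

Every variant URL of the page (with or without parameters, reached through a category or a tag) would output the same tag, which is what concentrates the signals on one address.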

8. Index and Noindex Tags

The front-end HTML code marked with a red box in the example above shows the noindex tag in use. The "index" and "noindex" values control whether search engines may index a page's content. There is no need to mark a page as "index" explicitly: when no "noindex" tag is present, search engines are allowed to add the page to their index, and it may appear in search results. The "noindex" tag, by contrast, tells search engines not to index the page. The crawler must still be able to fetch the page in order to see the tag (so the page must not be blocked in robots.txt), but the content is kept out of the index and therefore out of search results. In SEO, this is useful for pages that have no value in search results, such as login pages, thank-you pages, and privacy policy pages. Marking them "noindex" keeps them out of the results and keeps the site's indexed footprint focused on the pages that matter, so irrelevant pages do not drag down the site's overall SEO performance.

(1) Technical Implementation of the Noindex Tag

Similar to the programming logic of the other tags mentioned above, the robots `noindex` directive is output to the front-end page code by adding the relevant output code to the WordPress theme files, so that it appears as a robots meta tag in the `<head>` of the selected pages.
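
The decision logic is simple enough to sketch in a few lines. The example below is an illustration in Python, not WordPress theme code; the set `NOINDEX_SLUGS` and the function `robots_meta_tag` are hypothetical names, and the slugs mirror the page types mentioned above.

```python
# Hypothetical slugs of pages that should stay out of search results
NOINDEX_SLUGS = {"login", "thank-you", "privacy-policy"}

def robots_meta_tag(slug):
    """Return a robots meta tag for pages that should not be indexed.

    Pages that may be indexed need no tag at all, so an empty string
    is returned for them.
    """
    if slug in NOINDEX_SLUGS:
        return '<meta name="robots" content="noindex" />'
    return ""

for slug in ("login", "blog"):
    print(slug, "->", repr(robots_meta_tag(slug)))
```

Returning nothing for indexable pages reflects the default described above: the absence of `noindex` already means the page may be indexed.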

9. Schema

In the image above, the red box highlights the Schema code written and deployed to the page. As shown, the Schema is written in JSON-LD, a JSON-based syntax. This structured data helps search engines better understand the content of the webpage and can improve how the page is displayed in search results. In practice there are two reference points for Schema: the Schema.org vocabulary and the structured data types that Google documents for its own search features, referred to here as Google Schema. They differ in scope, but their goal is the same: to optimize the presentation of website content in search engines and improve SEO performance.

(1) Schema.org

Schema.org is an open standard jointly promoted by Google, Microsoft (Bing), Yahoo, Yandex, and other search engines. Its purpose is to provide a unified framework for structured data, allowing different search engines to better understand and process webpage content. Schema.org offers a broad range of types and properties, suitable for content such as articles, events, products, and organizations. It can be expressed in three formats: JSON-LD, RDFa, and Microdata. Google Schema can be considered a part of Schema.org; as the open standard, Schema.org is the more flexible and universal of the two, suitable for multiple platforms and search engines.

(2) Google Schema

Google Schema refers to the structured data types and properties that Google documents for its own search engine, typically used to provide more information in search results. For example, it covers types such as products, reviews, and company information, which can directly affect how results are displayed, for instance as rich snippets or card-style results. For each type, Google specifies required and recommended fields that a page must supply to be eligible for these display features.

(3) Schema Coding and Deployment

After selecting Google Schema or Schema.org, the next step is to write or adapt the Schema code according to the structured data syntax published by the official sources. Schema code is written in JSON-LD, the structured data format recommended by Google. When writing the code, developers choose the Schema type that matches the content: for example, the Article type for article pages and the Organization type for company websites. Each type has properties such as name, URL, description, and address, which developers fill in with the information specific to their website. After writing the structured data, the next step is to deploy it in the WordPress theme files. Typically, the Schema code is inserted into the header.php file so that it is output in the `<head>` of every page. This way, the structured data is delivered with every page load and search engines can read it when they crawl the page. In WordPress, deployment is not complicated: the JSON-LD code can be embedded directly in the theme files or generated automatically by a plugin.
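
To show what the deployed markup looks like, here is a minimal sketch that assembles an Article object and wraps it in the `<script type="application/ld+json">` element that belongs in the page head. It is written in Python for illustration rather than as theme code; the function name `article_json_ld` and all field values are assumptions, and the property set is deliberately small.

```python
import json

def article_json_ld(headline, url, author_name, date_published):
    """Return a JSON-LD <script> element describing an Article."""
    data = {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        "url": url,
        "author": {"@type": "Person", "name": author_name},
        "datePublished": date_published,  # ISO 8601 date
    }
    # Escape "</" so a value can never close the <script> element early;
    # "\/" is a valid JSON escape, so the data still parses unchanged.
    payload = json.dumps(data, indent=2).replace("</", "<\\/")
    return f'<script type="application/ld+json">\n{payload}\n</script>'

# Hypothetical article details
snippet = article_json_ld(
    headline="Google SEO Comprehensive Guide",
    url="https://example.com/blog/google-seo-guide/",
    author_name="Jane Doe",
    date_published="2025-02-01",
)
print(snippet)
```

The printed snippet can be pasted into a structured data testing tool before it is added to the theme.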

(4) Schema Testing

After deploying the code to the front-end page, developers can use a structured data testing tool to check that the Schema is implemented correctly and that the page is eligible for rich snippets (such as ratings, reviews, and events). Google's tool is the Rich Results Test, and Schema.org's tool is the Schema Markup Validator. Both accept either a URL or pasted Schema code. Schema (structured data) is crucial for improving a website's visibility in search engines because it not only helps search engines understand the page content but also enhances how results are displayed, which can improve click-through rates.

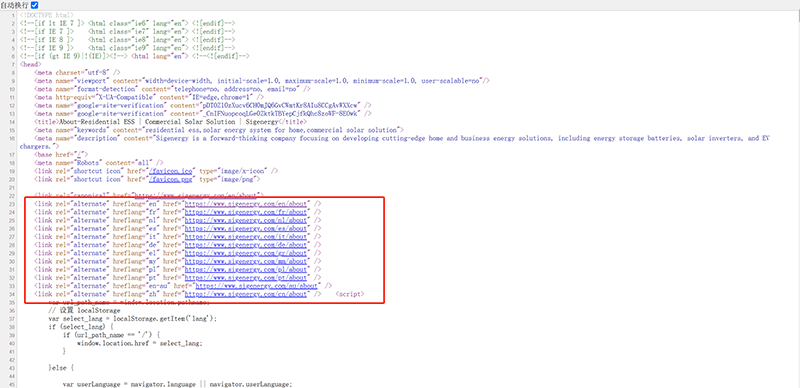

10. Hreflang Tags

The specific syntax and arrangement format for outputting the hreflang tag information to the front-end page code is shown in the red box in the image above. The hreflang tag is an HTML element used to tell search engines the language and regional targeting of a specific page, helping search engines identify the appropriate content version for specific users. The hreflang tag is especially important for multilingual websites, as it clearly indicates which language or regional version of a page is intended for users in a particular region, ensuring the correct page version appears in search results. For example, if a website offers content in both English and Chinese, using the hreflang tag ensures that the English page is shown to users in English-speaking countries, while the Chinese page is shown to Chinese-speaking users. In SEO, the hreflang tag helps search engines avoid treating pages with similar or identical content as duplicate content, as different versions of a multilingual site may contain overlapping content. Without the hreflang tag, search engines may mistakenly index and display the wrong page version, which not only affects user experience but could also negatively impact SEO rankings. By properly using the hreflang tag, websites can increase their visibility in specific regions and languages, ensuring that each page is displayed to the correct target audience.
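
As a textual counterpart to the markup described above, the sketch below generates the hreflang elements for a page that exists in English and Chinese. It is an illustration in Python; the function name `hreflang_tags`, the URLs, and the choice of the English page as the `x-default` fallback are assumptions.

```python
from html import escape

def hreflang_tags(alternates, default_url=None):
    """Return <link rel="alternate"> elements for each language version.

    `alternates` maps a language (or language-region) code to the URL of
    that version; `default_url` is the optional x-default fallback.
    """
    tags = [
        f'<link rel="alternate" hreflang="{escape(code)}" href="{escape(url)}" />'
        for code, url in alternates.items()
    ]
    if default_url:
        tags.append(
            f'<link rel="alternate" hreflang="x-default" href="{escape(default_url)}" />'
        )
    return tags

# Hypothetical English and Chinese versions of the same page
alternates = {
    "en": "https://example.com/en/seo-guide/",
    "zh": "https://example.com/zh/seo-guide/",
}
tags = hreflang_tags(alternates, default_url="https://example.com/en/seo-guide/")
print("\n".join(tags))
```

The same full set of elements, including the entry for the page itself, should appear in the `<head>` of every language version so that the versions reference one another.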